
Personalized ads and recommendations often work by showing users products similar to ones they searched for before. This sounds challenging to code, but with some basic knowledge of Python and Elasticsearch, a simple version of text similarity search can be implemented in your project in just a few steps.

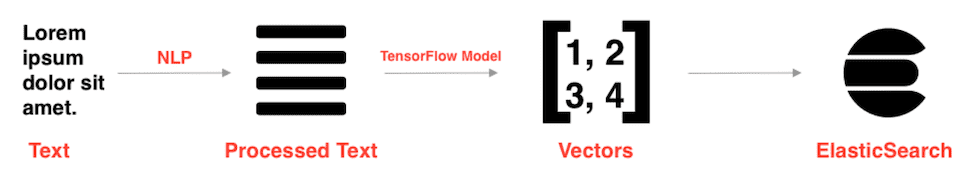

Top level overview of text similarity

Our solution will consist of the following components:

1. NLP: some Python code to preprocess each product’s description.

2. A TensorFlow model from TensorFlow Hub to construct a vector for each product description. Comparing vectors will allow us to compare the corresponding products for similarity.

3. Elasticsearch to store the vectors and use its native cosine similarity function to quickly find the most similar vectors.

Text processing

Depending on the text you are going to search, text processing can differ. Text processing refers to formatting, manipulating, and otherwise transforming the text, and we need to do it before we calculate the vectors.

For example, you can remove stopwords like “you”, “and”, “is” etc. from your sentence. In my case, I also decided to replace all the language-specific letters with plain ASCII ones: é to e, ü to u, and so on.

For NLP, one of the most popular Python libraries is NLTK. I used it to remove stopwords, but it can do much more. I recommend experimenting with it to find out which preprocessing gives you the most suitable scores. To replace language-specific letters I used a small library called unidecode.
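The two preprocessing steps above can be sketched without any dependencies. This is only an illustration: the tiny `STOPWORDS` set and the `strip_accents` helper are stand-ins I made up for this example. In a real project you would likely swap in `nltk.corpus.stopwords.words("english")` and `unidecode.unidecode`, which cover far more cases.

```python
import re
import unicodedata

# Tiny illustrative stopword list (an assumption for this sketch);
# NLTK's English stopword list is much more complete.
STOPWORDS = {"a", "an", "and", "are", "is", "of", "the", "to", "you"}


def strip_accents(text):
    """Replace accented letters with their base letter (é -> e, ü -> u)."""
    # NFKD splits an accented character into base letter + combining mark.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def format_text(text):
    """Lowercase, strip accents, drop punctuation and stopwords."""
    text = strip_accents(text).lower()
    tokens = re.findall(r"[a-z0-9]+", text)
    return " ".join(token for token in tokens if token not in STOPWORDS)
```

Calling `format_text` on a product description returns a cleaned string that is ready to be embedded.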

Text embedding with TensorFlow

Okay, so we need to use text embedding to calculate the vectors and insert them into the Elasticsearch index to use them for our search queries. TensorFlow is going to help us with that. We don’t need to train a model ourselves; on TensorFlow Hub we can choose from many models that are already trained and ready to use.

There is a wide range of models available, and just like before we need to choose one that suits our needs. In my case, with the product descriptions, the best fit was the Neural-Net Language Model (NNLM), English version.

Using TensorFlow Hub models is really simple and can be done with just a few lines of code.

First, we import the tensorflow_hub library, then we define our embedding function, which takes a list of descriptions to embed as its argument. Next we define the TensorFlow Hub URL of the model we will use to embed our text. Our embed function is created by loading the model from that URL. On the first launch it may take some time to start, because the model needs to be downloaded first. In the end, we return only the NumPy array of vectors, because we don’t need the rest of what the embed function returns, such as the shape.
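A minimal sketch of the embedding function described above. The model URL is my assumption of the TF2 handle for the 128-dimensional English NNLM; check TensorFlow Hub for the handle you actually want. Unlike the description above, this version caches the loaded model so repeated calls do not reload it, and it accepts an optional `model` argument (a hypothetical convenience) so the function can be exercised without downloading anything.

```python
# Assumed TF Hub handle for the English NNLM with 128 dimensions.
MODEL_URL = "https://tfhub.dev/google/nnlm-en-dim128/2"

_model = None  # cached so the model is loaded only once per process


def load_model():
    """Load the TF Hub model lazily; the first call downloads it."""
    global _model
    if _model is None:
        # Imported here so this module can be imported without TensorFlow.
        import tensorflow_hub as hub
        _model = hub.load(MODEL_URL)
    return _model


def embed_text(texts, model=None):
    """Return one embedding vector per input string as a NumPy array."""
    if model is None:
        model = load_model()
    # The model returns a tensor; .numpy() keeps just the array of vectors.
    return model(list(texts)).numpy()
```

With the assumed model, `embed_text(["red ceramic mug"])` should give an array with one row of 128 floats.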

Integrating Elasticsearch with Python

After you set up Elasticsearch (using Docker, for example) and experiment with it a little, you can easily integrate it with Python using the elasticsearch-py client. Creating a connection takes only a line or two.
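Creating the connection might look like the sketch below. The URL is an assumption (a local, unsecured single-node instance such as a default Docker setup), and `connect` and `is_reachable` are hypothetical helper names used only in this example.

```python
ES_URL = "http://localhost:9200"  # assumption: local node without auth


def connect(url=ES_URL):
    """Create an Elasticsearch client for the given URL."""
    # Imported here so the sketch loads even where the client is missing.
    from elasticsearch import Elasticsearch
    return Elasticsearch(url)


def is_reachable(es):
    """Return True if the cluster answers a ping."""
    return bool(es.ping())
```

Creating the client does not contact the server by itself, so calling `is_reachable(connect())` is a quick way to check that the node is up.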

From there you can easily create new indexes and get or insert documents. To use similarity search we need a field of type dense_vector. The number of dimensions depends on the TensorFlow Hub model you are using. In this case, it’s going to be 128.

If you want, you can specify other fields and types, but this can be skipped: Elasticsearch will detect the types for us when we insert data.
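Creating the index with a dense_vector field could be sketched as follows. The index name `products` and the field name `description_vector` are hypothetical, chosen for this example. The call uses the `body=` argument in the style of the 7.x Python client; newer client versions prefer passing `mappings=` directly, so adjust to the version you run.

```python
INDEX_NAME = "products"              # hypothetical index name
VECTOR_FIELD = "description_vector"  # hypothetical field name
VECTOR_DIMS = 128                    # must match the embedding model


def build_index_body(dims=VECTOR_DIMS):
    """Mapping with a single explicitly typed field for the vectors."""
    return {
        "mappings": {
            "properties": {
                VECTOR_FIELD: {"type": "dense_vector", "dims": dims},
            }
        }
    }


def create_index(es, index=INDEX_NAME):
    """Create the index on the given client (7.x-style body argument)."""
    return es.indices.create(index=index, body=build_index_body())
```

Only the vector field is declared here; every other field is left to Elasticsearch's dynamic mapping.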

Now we can dump the data into Elasticsearch, along with the vectors. Let’s create a list of documents that need to be sent to Elasticsearch. Remember to give the vector field the same name you used when creating the index.

Specifying _id is not required; Elasticsearch will otherwise generate a random URL-safe, base64-encoded one, but I prefer to keep documents under the same ID as they have in my PostgreSQL database.

The format_text function is the one that processes the text by removing stopwords and doing the other things mentioned above.

We add the embedded description to each request and call tolist() on it to get a plain Python list. The elasticsearch-py library comes with useful helpers, such as bulk document creation, which we are going to use.

The first argument we pass is the Elasticsearch instance and the second is the list of requests we created. Now we have our index populated with the data and vectors we will use for search.
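The indexing step can be sketched like this. The product dictionary keys (`id`, `name`, `description`), the index name and the `description_vector` field are all assumptions for this example. The vectors are expected to be one NumPy row per product, for instance the result of embedding the formatted descriptions.

```python
def build_actions(products, vectors, index="products"):
    """Pair each product with its vector and build bulk index actions."""
    actions = []
    for product, vector in zip(products, vectors):
        actions.append({
            "_index": index,
            "_id": product["id"],  # reuse the database ID
            "_source": {
                "name": product["name"],
                "description": product["description"],
                # tolist() turns the NumPy row into plain Python floats,
                # which serialize to JSON without extra work.
                "description_vector": vector.tolist(),
            },
        })
    return actions


def index_products(es, actions):
    """Send all actions in bulk; returns (success_count, errors)."""
    # Imported here so the sketch loads even where the client is missing.
    from elasticsearch import helpers
    return helpers.bulk(es, actions)
```

Keeping `build_actions` separate from the network call makes it easy to inspect the documents before anything is sent.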

Creating a custom score function for search

All that is left is to create a custom score function for search. To compare our vectors we will use cosine similarity. Since Elasticsearch 7.3, the cosineSimilarity function is available natively in its scripting language, where it can be used inside a script_score query.
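A sketch of such a query, built as a plain Python dictionary. The field names (`name`, `description`, `description_vector`) are assumptions for this example. The script passes the field name as a string, which is the form used from Elasticsearch 7.6 onward; on 7.3 to 7.5 the second argument would be written as `doc['description_vector']` instead.

```python
def build_query(query_vector, size=5):
    """Build a script_score query ranking documents by cosine similarity."""
    return {
        "size": size,                        # how many similar documents
        "_source": ["name", "description"],  # leave the long vectors out
        "query": {
            "script_score": {
                "query": {"match_all": {}},  # score every document
                "script": {
                    # + 1.0 keeps the score non-negative.
                    "source": (
                        "cosineSimilarity(params.queryVector, "
                        "'description_vector') + 1.0"
                    ),
                    "params": {"queryVector": query_vector},
                },
            }
        },
    }
```

The `query_vector` argument must be a plain list of floats with the same number of dimensions as the indexed vectors.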

I like to specify which fields the response should include, to avoid seeing long arrays of vectors for each similar record found. You can also specify how many similar documents should be returned with the “size” parameter. We need to embed the text we are searching similar products for and pass it as queryVector. We use queryVector in cosineSimilarity together with descriptionvector from the document to calculate the similarity, and the query is a match_all one, so it iterates over all documents in the index. If you want to be more specific you can experiment with it. Finally, you need to add 1 to your score script: cosine similarity ranges from -1 to 1, and Elasticsearch doesn’t support negative scores.
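To see why the + 1 is needed, here is cosine similarity written out in plain Python. This is only an illustration of the formula, not how Elasticsearch computes it internally; `shifted_score` is a hypothetical name for the value the score script produces.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors, in the range [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def shifted_score(a, b):
    """Cosine similarity moved into [0, 2] so it is never negative."""
    return cosine_similarity(a, b) + 1.0
```

Vectors pointing in opposite directions have a cosine of -1, which the shift turns into a score of 0; identical directions give the maximum score of 2.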

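What’s left is just sending the request using the created query.

We will get a response with similar documents ordered by similarity score, from most to least similar. Because of the added 1, the score falls between 0 and 2 rather than being a percentage. And really that’s all.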
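Sending the request and reading the response could look like this sketch. It assumes a `products` index, a query whose score script adds 1 to the cosine similarity, and the 7.x-style `body=` argument of the Python client. `find_similar` is a hypothetical helper name.

```python
def find_similar(es, query, index="products"):
    """Run the query and return hits with the raw cosine similarity."""
    response = es.search(index=index, body=query)
    results = []
    for hit in response["hits"]["hits"]:
        results.append({
            "id": hit["_id"],
            # Undo the + 1 from the score script to get the cosine back.
            "cosine": hit["_score"] - 1.0,
            "source": hit.get("_source", {}),
        })
    return results
```

Elasticsearch already returns hits sorted by descending score, so the first entry is the most similar document.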

As you can see, implementing a basic similarity search is really easy and can help you build a proof of concept for your project. Elasticsearch is a really powerful tool. For more accurate scores you will need to spend more time on text processing, choosing the model, or even training one yourself.
